Evaluating Your LLM-Based Tool

Questions to Ask When Buying or Building a Tool Built on an LLM

Lots of people are selling LLM-based products these days, but it can be difficult to tell which ones are useful and which ones aren’t, especially if you have a very niche use case.

I wrote earlier about the broad benchmarks that HuggingFace is using to evaluate its top open-source models: what each of those benchmarks actually means, and how the open-source models compare to the OpenAI models. If you’re looking for the most broadly capable models, these benchmarks are a good starting point.

If you're interested in a tool tailored for a specific task or set of tasks, you'll need a different approach.

Assessing Task-Specific LLM Tools: The Three Key Questions

There are various types of narrow or task-specific tools that you can build with an LLM. Some examples include a chatbot assisting customers, a model that summarizes the technical resumes of candidates, or a system that tags USAJobs postings against a list of pre-defined occupational categories like “data analyst” and “data engineer.”

The base model is relevant for the performance of your tool, but it’s not the whole story. Your tool-specific evaluation should center on:

Initial Performance: How well does the tool perform on its initial test data?

Adaptability: How does the tool respond when presented with unexpected data?

Ongoing Monitoring: Are there ongoing checks for whether the model is working with new data? Is there a process for improvement?

How important each of these components is, and how systematically you need to approach it, will vary with your use case. If your tool is customer-facing, you should have extremely thorough answers to all of these questions. If you’re providing a tool internally to a group of employees to assist them with a low-stakes process, then building it and relying on user feedback as the main form of assessment may be a more reasonable approach.

For this post, I’ll focus on the first part: how do you initially evaluate your LLM-based tool?

Defining Success for Your LLM Tool

To evaluate your tool’s performance, you must define what constitutes success or failure.

This could involve using pre-labeled data and automated comparisons, after-the-fact human rating, or a combination of both methods.

Here are some examples of what that evaluation process could look like for different use cases.

Scenario One: Customer Query Handling

In this scenario, you’re using an LLM to address straightforward customer queries by sending pre-written replies, while redirecting complicated questions to human operators.

Imagine you have a chat tool on your website. A customer asks when the store is open. You want the chatbot to reply with a pre-written set of text which includes the store hours. It will do this by categorizing a question about store hours as being about store hours, and then the next step of the chatbot matches “store hour query” to a pre-written text snippet (“Our store opens at 8am and closes at 6pm.”) and uses that to answer the customer. If the customer asks whether a new store is going to be opening in their neighborhood, the LLM will characterize this as not being something where there’s a pre-written response, and pass it off to a human operator to answer.

To evaluate this chatbot, you could compare its responses against a dataset of past interactions with customer service agents. Feed the chatbot the conversation openings that customers have used in the past with human agents, then compare the historical human answers with the chatbot’s answers. In what percentage of store hour queries does the chatbot correctly respond with the text about store hours?

You can also evaluate the types of mistakes made. How often did it incorrectly respond with store hours when it should have responded with either a different pre-written text, or by handing off the user to a person?
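As a minimal sketch of that comparison, assume each historical conversation opening has been labeled with the route a human agent would have chosen, and that the chatbot's chosen route has been recorded next to it. The route names and example rows below are hypothetical, not taken from a real dataset.

```python
from collections import Counter

# Hypothetical rows: (customer message, expected route, route the chatbot chose).
results = [
    ("When do you open?", "store_hours", "store_hours"),
    ("What time do you close on Sunday?", "store_hours", "store_hours"),
    ("Are you opening a store near me?", "human_handoff", "store_hours"),
    ("How do I return a jacket?", "returns", "returns"),
    ("Is the store open right now?", "store_hours", "human_handoff"),
]


def route_accuracy(rows, route):
    """Share of queries expected to go to `route` that the chatbot routed there."""
    relevant = [row for row in rows if row[1] == route]
    if not relevant:
        return None  # no examples of this route, so accuracy is undefined
    correct = sum(1 for _, expected, predicted in relevant if expected == predicted)
    return correct / len(relevant)


def error_breakdown(rows):
    """Count each (expected, predicted) pair where the chatbot picked the wrong route."""
    return Counter(
        (expected, predicted)
        for _, expected, predicted in rows
        if expected != predicted
    )


store_hours_accuracy = route_accuracy(results, "store_hours")
errors = error_breakdown(results)

print(f"store_hours accuracy: {store_hours_accuracy:.0%}")
for (expected, predicted), count in errors.items():
    print(f"expected {expected}, got {predicted}: {count}")
```

The error breakdown keeps the two kinds of mistake apart: answering with store hours when a handoff was needed is a different problem from handing off a question the chatbot could have answered.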

Scenario Two: Resume Summarization

You might want to use an LLM to summarize the technical background of resumes for software development jobs. The input is the full resume, the output is a brief summary of the candidate’s professional software development experience.

There are several ways you could run this evaluation. One looks like the following:

Have one person or group of people perform the same task as the LLM, generating brief technical summaries.

Have another person or group of people blindly rate the usefulness of the human-created summaries vs. the LLM summaries.

Once you have a comparison of how useful raters found the LLM-generated and human-generated summaries, you’ll have a better sense of how much quality you might lose (or gain!) by switching to an LLM, and whether that change is acceptable for your business.

You can also use the same type of evaluation process if you’re considering different LLMs or different prompts for your resume-summarizing tool: in all of these cases, you can use human raters to rate your tool.

Scenario Three: Multi-Label Classification (e.g., Job Post Tagging)

In this scenario, you’re taking government job listings and trying to make them more useful and searchable by tagging each of them with multiple occupational labels based on the content of the job ad. For example, the same job listing might be tagged as “budget analyst”, “data analyst”, and “researcher”. The LLM must choose from a pre-set list of possible occupations.

To evaluate this tool, you could have experts annotate a selection of the same job listings, giving them the same pre-set list of occupations that the LLM received. You could then calculate agreement scores both among the experts and between the experts and the LLM. The output in each case could be the percentage of overlap between responses.
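One way to turn "percentage of overlap" into a number is the Jaccard overlap: the labels two annotators share, divided by all the labels either of them used. The sketch below assumes that definition (other agreement measures exist), and the listing IDs and annotations are made up for illustration.

```python
def overlap(labels_a, labels_b):
    """Jaccard overlap: shared labels divided by all labels used by either annotator."""
    a, b = set(labels_a), set(labels_b)
    if not a and not b:
        return 1.0  # assumption: two empty label sets count as full agreement
    return len(a & b) / len(a | b)


def mean_overlap(annotations_a, annotations_b):
    """Average overlap across the job listings that both annotators labeled."""
    shared = annotations_a.keys() & annotations_b.keys()
    if not shared:
        raise ValueError("the two annotators have no listings in common")
    return sum(overlap(annotations_a[k], annotations_b[k]) for k in shared) / len(shared)


# Hypothetical annotations keyed by job listing ID.
expert_1 = {"job-1": ["budget analyst", "data analyst"], "job-2": ["researcher"]}
expert_2 = {"job-1": ["budget analyst", "data analyst", "researcher"], "job-2": ["researcher"]}
llm = {"job-1": ["data analyst"], "job-2": ["researcher", "data engineer"]}

# Agreement between the experts sets a rough ceiling for what to expect from the LLM.
expert_agreement = mean_overlap(expert_1, expert_2)
llm_agreement = (mean_overlap(expert_1, llm) + mean_overlap(expert_2, llm)) / 2

print(f"expert vs. expert: {expert_agreement:.2f}")
print(f"expert vs. LLM:    {llm_agreement:.2f}")
```

Comparing the two numbers matters more than either one alone: if the experts only partly agree with each other, a similar score for the LLM may be as good as the task allows.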

Automated Text Comparison Methods

Needing people on hand to evaluate the output of LLMs, as in the resume summarization example, is a major limitation. It also means that whenever you want to test a new model or prompt, your raters have to do the whole evaluation over again. If you can automate the comparison between your expected answer and the observed answer, you can test each new model or prompt without a round of manual evaluation work each time.

Fortunately, there are methods for automated text comparisons.

Exact Matching

This technique checks whether the generated output matches the expected result exactly, for instance when you’re looking for a “yes” or a “no”. You can also use it for multi-label classification, as in the case of tagging job postings with a list of occupations: if you expect the tool to return a list containing “data analyst”, “data scientist”, and “data engineer”, how many of these exact labels does it actually return?

This technique works well when you’re getting back extremely structured data (like a “yes” or “no”) and success or failure is binary.
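A rough sketch of both uses follows. One assumption is added here that the text above does not state: labels are lowercased and trimmed before comparison, so that "Data Analyst" and "data analyst" count as the same label. Drop `normalize` if you need a strictly character-for-character match.

```python
def normalize(label):
    """Lowercase and trim, so trivial formatting differences don't count as misses."""
    return label.strip().lower()


def exact_match(expected, observed):
    """True only if the whole output equals the expected answer after normalization."""
    return normalize(expected) == normalize(observed)


def label_match_counts(expected_labels, observed_labels):
    """Count expected labels that were returned, missed, or returned unexpectedly."""
    expected = {normalize(label) for label in expected_labels}
    observed = {normalize(label) for label in observed_labels}
    return {
        "matched": len(expected & observed),
        "missed": len(expected - observed),
        "unexpected": len(observed - expected),
    }


# Hypothetical tool output for a single job posting.
counts = label_match_counts(
    ["data analyst", "data scientist", "data engineer"],
    ["Data Analyst", "data engineer", "software engineer"],
)
print(counts)
```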

Fuzzy Matching

Instead of an exact match, this method focuses on the presence or absence of certain types of text patterns.

For instance, when classifying job titles, instead of pinpointing a perfect match, you could gauge the presence of terms like “data” or “engineer”. Depending on the task, you might search for an exact substring (like “data”) or a more complicated text pattern, like a regular expression.
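Both variants can be sketched with the Python standard library. The pattern below is a hypothetical example of a "more complicated text pattern": it looks for the whole word "data" followed later in the title by "engineer" or "engineering".

```python
import re


def contains_substring(text, term):
    """Case-insensitive check for a plain substring."""
    return term.lower() in text.lower()


# Hypothetical pattern: the word "data", then "engineer" or "engineering" later on.
DATA_ENGINEER = re.compile(r"\bdata\b.*\bengineer(ing)?\b", re.IGNORECASE)


def matches_pattern(text, pattern=DATA_ENGINEER):
    """True if the regular expression matches anywhere in the text."""
    return pattern.search(text) is not None


for title in ["Senior Data Platform Engineer", "Database Engineer", "Data Analyst"]:
    print(title, contains_substring(title, "data"), matches_pattern(title))
```

The substring check is deliberately loose: it accepts "Database Engineer" because "data" appears inside "Database", while the word-boundary pattern rejects it. Which behavior you want depends on the task.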

Text Similarity

Text similarity algorithms are designed to determine the likeness between two sets of text.

Syntactic Similarity: Measures structural similarity. One common method is 'edit distance', which calculates the minimal number of character changes required to make two strings identical. For instance, the edit distance between “moral” and “mortal” is 1: you just have to add a “t”.

Semantic Similarity: Measures the closeness in terms of meaning. You could use a semantic model to compare human-generated resume summarization text with LLM-generated text. However, this pushes your evaluation process back a step: you need to make sure that whatever tool you use for this works on your use case.
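To make the edit distance example concrete, here is a small sketch of the Levenshtein variant, which counts single-character insertions, deletions, and substitutions. The `normalized_similarity` helper is an added convenience, not a standard definition: it rescales the distance to a score between 0 and 1 so that strings of different lengths can be compared.

```python
def edit_distance(a, b):
    """Levenshtein distance: fewest insertions, deletions, and substitutions to turn a into b."""
    # previous[j] holds the distance between the prefix of a seen so far and b[:j].
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute, or keep when the characters match
            ))
        previous = current
    return previous[-1]


def normalized_similarity(a, b):
    """Score from 0 to 1, where 1 means identical strings (an illustrative rescaling)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - edit_distance(a, b) / longest


print(edit_distance("moral", "mortal"))
print(round(normalized_similarity("moral", "mortal"), 2))
```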

Evaluation Via an LLM

You can use LLMs to compare multiple texts.

Give two pieces of text to an LLM and ask about their similarity, either in general or on a particular metric. For instance, you could use this with your human vs. LLM-created resume summaries, in addition to the original resume, and ask it to assess the degree to which they’re returning the same output. But, as with the previous method, now you also need to evaluate your evaluation method.
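A sketch of what this could look like in code is below. It deliberately avoids naming any particular vendor's API: `ask_llm` is a hypothetical function that you supply, taking a prompt string and returning the model's reply as a string. The prompt wording and the 1 to 5 scale are also illustrative choices, not a recommended rubric.

```python
import re

PROMPT_TEMPLATE = """You are comparing two summaries of the same resume.

Resume:
{resume}

Summary A:
{summary_a}

Summary B:
{summary_b}

On a scale from 1 (completely different) to 5 (same content), how closely do
the two summaries agree about the candidate's software development experience?
Reply with a single digit."""


def build_prompt(resume, summary_a, summary_b):
    """Fill the template with the original resume and the two summaries."""
    return PROMPT_TEMPLATE.format(resume=resume, summary_a=summary_a, summary_b=summary_b)


def parse_score(reply):
    """Return the first standalone digit from 1 to 5 in the reply, or None if there is none."""
    match = re.search(r"\b[1-5]\b", reply)
    return int(match.group()) if match else None


def judge_similarity(resume, summary_a, summary_b, ask_llm):
    """Ask the judge model for a score. `ask_llm` is caller-supplied: prompt -> reply."""
    return parse_score(ask_llm(build_prompt(resume, summary_a, summary_b)))
```

Returning `None` for replies without a usable score lets you count how often the judge fails to follow the format, which is itself a useful signal when you evaluate the evaluator.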

Ways to Automate Other Types of Text Evaluation

For some use cases, evaluating LLM-based results isn't just about checking for correctness. For instance, if your chatbot takes user input and a user says something negative or abusive, does the chatbot respond in kind?

There are a couple of different measurements which can be used to assess this.

1) Toxicity: Different people have different ideas about what constitutes toxic content. But if you want to get started, there’s a Python library called detoxify that you can use to quickly label text with various toxicity-related tags.

2) Sentiment analysis: This classifies the tone of a piece of text, determining whether it’s positive, neutral, or negative. The NLTK Python library is a standard tool for this, and it also has an R wrapper. Additionally, the HuggingFace platform offers various models for performing sentiment analysis.

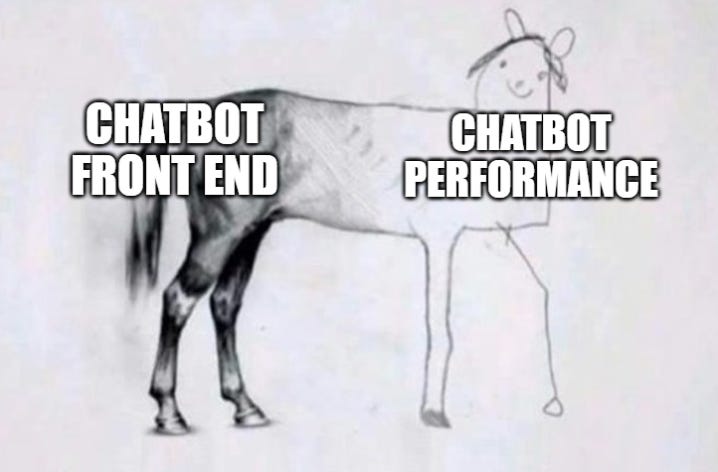

The Speed of LLM Tool Creation vs. The Need for Rigorous Assessment

Building a tool based on an LLM can be astonishingly fast. You might build a chatbot which lets you query some documents or a classification model for resumes in an afternoon.

This rapid prototyping is one of the most compelling strengths of LLMs. Instead of having to label thousands of observations before you can build a model and find out that it’s not going to work, you can much more quickly identify good ideas and dismiss bad ones.

But just because you (or your vendor) can quickly build an LLM tool that appears functional doesn’t mean it’s ready to be deployed to users. The assessment phase might eclipse the building phase in terms of time and effort. And it should!

The degree of your assessment should be proportionate to the cost of getting it wrong. If you're rolling out a tool for external users or customers, the stakes are high. If you’re releasing something internally and making clear that it’s in beta, you may conclude that monitoring adoption rates or soliciting feedback can serve as your major assessment tools.

The good news is you don’t have to invent assessment tools from scratch. There are a variety of evaluation tools and methods which already exist and can form the building blocks of a robust assessment protocol which is specific to your tool and task. If you’re building tools yourself, you can incorporate them.

And if someone is trying to sell you something, you can ask them how they’re using these types of tools to assess performance.