The Pattern I Use in Every LLM Project

Pydantic and LiteLLM for structured output

Every time I process text with LLMs—whether it’s analyzing public comments, matching YouTube videos to congressional hearings, or summarizing research papers—I use the same pattern to get my output from the model.

In the early days of ChatGPT, I had projects where half my prompt was begging the model to ONLY SAY YES OR NO or GIVE THIS TO ME AS A LIST. Sometimes it worked. Often it didn’t, and I was stuck cleaning up the output. The model would give me a paragraph about why something might be yes or no, when all I needed was the answer.

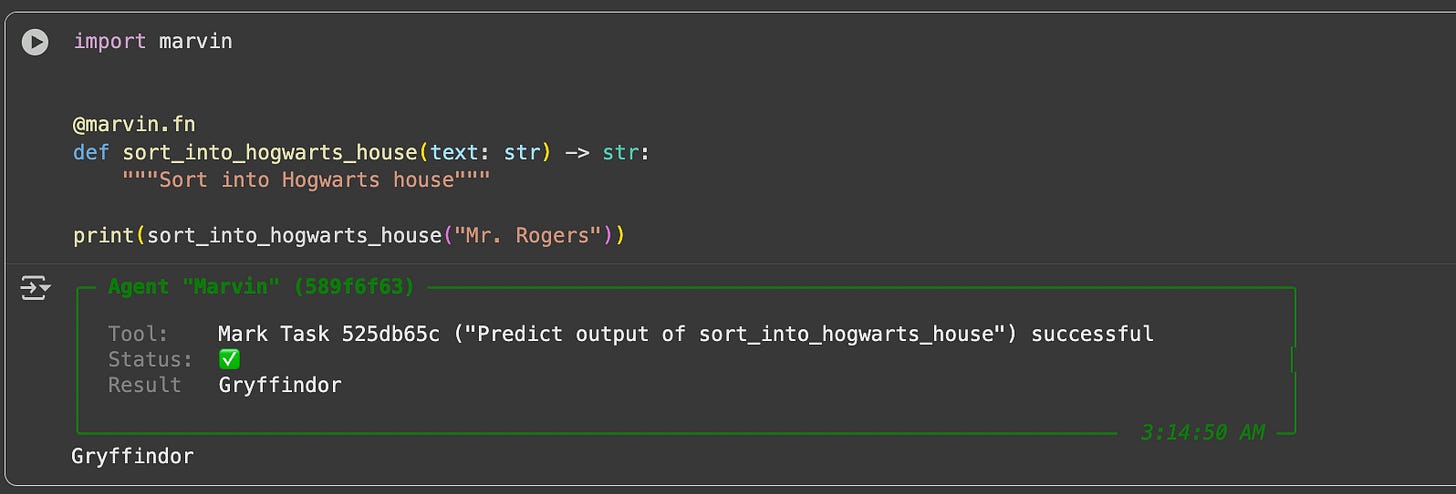

Then the Marvin library came out and fixed this for OpenAI models: instead of begging or lecturing, you could just describe the behavior of the LLM and your output would come back formatted correctly. I heard Adam Azzam talk about it at a meetup, and that weekend I rebuilt my project. Suddenly, I could define exactly what structure I wanted back, and the model would comply.

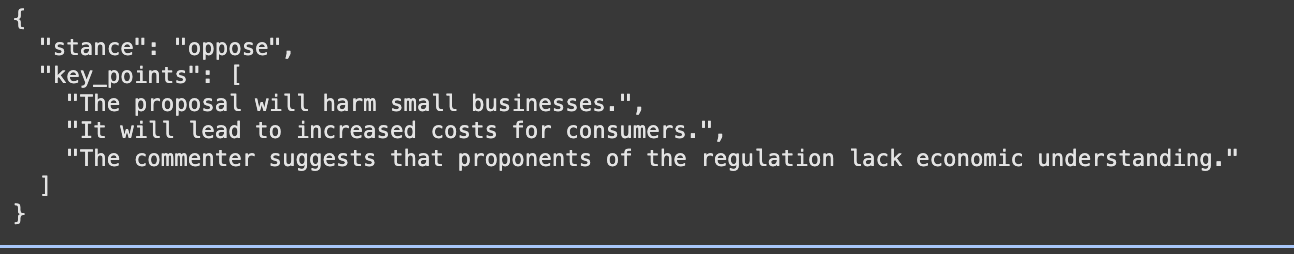

Now this general pattern—where you describe the behavior you want—is built into many LLMs natively. Here’s what it looks like, using LiteLLM, a library that provides a consistent interface across different LLM providers. In the following example, we use an LLM to characterize text as support, oppose, or neutral, and we get a list of the main arguments it's making.

from pydantic import BaseModel, Field

from enum import Enum

import litellm

import json

litellm.api_key=your_api_key # Replace this with your actual key!

class Stance(str, Enum):

SUPPORT = “support”

OPPOSE = “oppose”

NEUTRAL = “neutral”

class CommentAnalysis(BaseModel):

stance: Stance = Field(

description=”’support’ if favoring proposal, ‘oppose’ if against, ‘neutral’ if no clear position”

)

key_points: list[str] = Field(

description=”Main arguments from the comment”

)

comment_text = “”“I can’t believe anyone is considering this.

It will hurt small businesses and increase costs for consumers.

Read an economics textbook, losers.”“”

response = litellm.completion(

model=”gpt-4o-mini”,

messages=[

{”role”: “system”, “content”: “Analyze public comments on regulations. Determine stance (support/oppose/neutral) and extract key points.”},

{”role”: “user”, “content”: f”Analyze this comment: {comment_text}”}

],

response_format=CommentAnalysis,

temperature=0.0

)That’s the core of it. The model returns the structure you defined, with stance limited to your three options, and with a list of arguments.

Important caveat: This is just about getting your output structured correctly—the content might still be wrong!

Why This Works So Well

Three components make this pattern work:

Pydantic declares fields, like Stance, and types, like string or list

Enums constrain choices (yes/no, support/oppose/neutral, high/medium/low)

LiteLLM provides a portable interface so you aren’t rewriting provider-or model-specific glue to call the LLM.

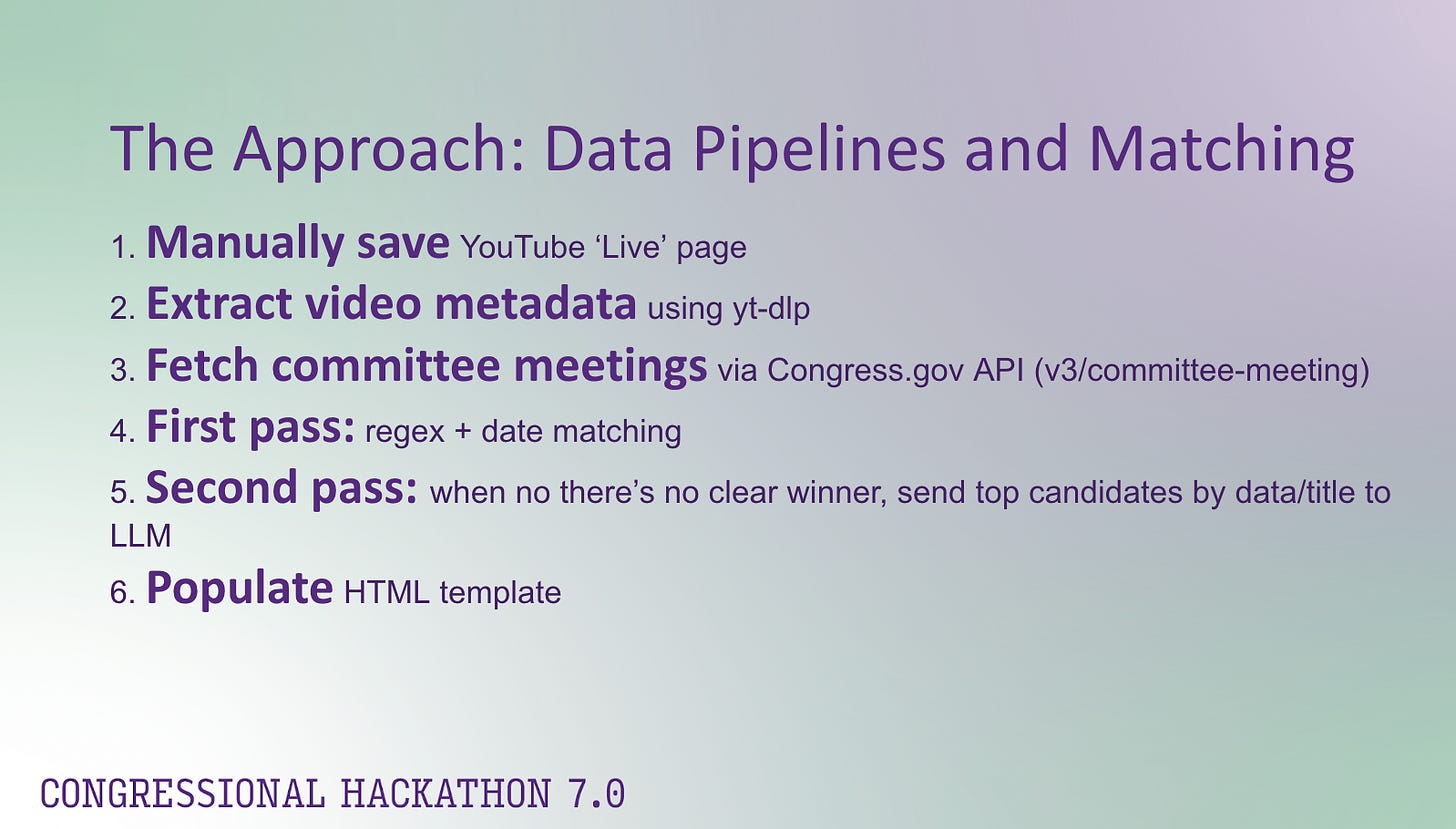

A Real Example: Matching Congressional Hearings to YouTube

For the Congressional hackathon recently, I needed to match YouTube videos of hearings (the first data set) with their official Congress.gov records (the second data set). The problem is that, while we have the titles and dates in both, they frequently don’t match exactly across data sets.

For instance, “Health Subcommittee Markup of 23 Bills” and “23 Pieces of Legislation” use almost entirely different words, but refer to the same event.

My approach:

First pass: Heuristics. Date matching and lexical title similarity (comparing the actual words, not the meaning)

Second pass: LLM adjudication. For uncertain cases, hand the top matches to an LLM and ask it to decide.

For the structured output, I asked for a match ID number (or None, if there’s no good match), a confidence level, and "reasoning”, or an explanation of the answer.

For this project, I didn’t actually use the confidence or reasoning fields for anything. The confidence score isn’t calibrated—it hasn’t been trained against labeled examples, so it’s just a guess. And the reasoning field isn’t the model’s actual decision process, but a post-hoc justification. Both can still be handy for debugging or flagging cases to review.

Where I’ve Used This

I’ve used variations of this pattern for:

Analyzing public comments: First, discovering what themes people discuss, then classifying each comment using those themes

Processing research papers: Extracting summaries, key contributions, methods used

Evaluating synthetic data: Checking if LLM-generated content meets specific criteria

Same building block, different structure.

When It Doesn’t Work

I had one dataset where this pattern just wouldn’t work with certain providers: I wasn't able to consistently get back the structure I wanted. It worked fine with one model but failed with others. I tried multiple different tools for getting structured output across providers until I gave up and said “well, I guess I’m just using this with Ollama,” which is a tool for running LLMs locally on your own machine.

But since then I’ve used this on probably 200,000 documents across a dozen projects, and that’s the only failure I’ve gotten. Your mileage will vary with provider and data weirdness. If you see failures—where models keep breaking your schema despite using structured output—you can try heavier-duty tools.

For instance, there’s Instructor, which does both output validation (as opposed to just passing the class to the model) and automatic retries if there are failure. I don’t use it because I haven’t needed it, but it’s out there if you do.

Also, not every model supports structured output. LiteLLM provides an API endpoint for checking whether a particular model supports it, which is helpful when you’re trying different models.

The Bigger Pattern

This structured output technique is part of a bigger pattern: most LLM applications I see aren’t chatbots or RAG systems. They’re document processing pipelines that ask the same questions of many documents.

When you need to process a pile of text and extract the same information from each one, this pattern is a good one. Same questions, many documents, structured answers.

The code for my YouTube matcher is on GitHub if you want to see the full implementation. But honestly, the pattern above is most of what you need to know. Define your structure, constrain your choices, and let the model do the work.

Have you tried Posits chatlas library? It also lets you define structured responses, using pydantic.

https://posit-dev.github.io/chatlas/get-started/structured-data.html