The ChatGPT Code Interpreter Is A Tool, Not An Analyst

Recently, OpenAI widely released Code Interpreter, their product for analyzing user-uploaded data sets. A lot of people are excited about this release, with some going as far as saying that it has the skills of a consultant or analyst.

I’ve tested it out and found it useful, but also consistently incorrect.

When an LLM like ChatGPT gives you output, you should double-check it before you hit send or publish. If you don’t, you will get debacles like an article about Star Wars with a variety of mistakes. Over the past few months, people have been learning this.

It seems like with Code Interpreter, we will have to learn this lesson all over again. Code Interpreter can hand you professionally-formatted graphs which make it appear like it knows what it’s doing. However, like ChatGPT in general, it can still make mistakes in interpreting basic facts and can confidently suggest a wide variety of poor choices.

I’m going to write about a selection of the issues I ran into when I tested Code Interpreter on a variety of data analysis tasks, and then about how I think this can be useful — if you apply the same level of oversight to it as you should with all LLM content, even if this one feels different.

Code Interpreter Can’t Handle Basic Formatting Issues

I asked Code Interpreter to read in a file with school-level enrollment data. The second-to-last row was a “summary” row, the first cell of which contained “DCPS Schools Total.” The “school name” field was blank. Code Interpreter treated this row as a school (with 48,000 students!) when it calculated the total number of schools and students in the data set. When it calculated the biggest school, it did comment that the school name was missing, but it didn’t say how many students that “school” had, and you could easily have missed the comment if you weren’t interested in (or didn’t ask about) the biggest school. This means that nearly all of the numbers it returned (the count of schools, the total number of students, and the average student count) were incorrect.
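To make the summary-row problem concrete, here is a minimal pandas sketch. The column names and numbers are made up for illustration (they are not the actual enrollment file), and it assumes a summary row can be recognized by its blank school name.

```python
import pandas as pd

# Hypothetical enrollment data; columns and values are invented for illustration.
df = pd.DataFrame({
    "school_code": ["101", "102", "103", "DCPS Schools Total"],
    "school_name": ["Adams ES", "Baker MS", "Clark HS", None],
    "enrollment": [300, 450, 1200, 1950],
})

# Assumption: a real school always has a name, so a blank name marks a summary row.
is_summary = df["school_name"].isna()
schools = df[~is_summary]

summary = {
    "rows_dropped": int(is_summary.sum()),
    "school_count": len(schools),
    "total_students": int(schools["enrollment"].sum()),
    "mean_enrollment": float(schools["enrollment"].mean()),
}
print(summary)
```

Reporting how many rows were dropped, alongside the totals, makes it obvious when a summary row was (or was not) excluded.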

I then asked it to merge the enrollment data with another school-level data set. I told it that the names of the schools might be slightly different across data sets, but that it should still merge them. Not only did it fail to determine that, for instance, Woodrow Wilson HS was the same as Woodrow Wilson High School, but it also didn’t report that only a few of the names found matches across data sets. Anyone casually glancing at the graph it produced with the (incorrectly) merged data could have assumed it was complete.
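A rough sketch of what a more careful merge might look like follows. The abbreviation list, the second data set, and every value other than the Woodrow Wilson names are hypothetical; the point is only that normalizing names and asking pandas for a merge indicator surfaces the rows that did not match.

```python
import pandas as pd

def normalize_name(name: str) -> str:
    """Lowercase a school name and expand a few abbreviations (hypothetical list)."""
    replacements = {"hs": "high school", "ms": "middle school", "es": "elementary school"}
    words = name.lower().replace(".", "").split()
    return " ".join(replacements.get(word, word) for word in words)

# Made-up example data sets.
enrollment = pd.DataFrame({
    "school": ["Woodrow Wilson HS", "Sample MS", "Demo ES"],
    "students": [1800, 1400, 700],
})
other = pd.DataFrame({
    "school": ["Woodrow Wilson High School", "Sample Middle School", "Another Elementary School"],
    "teachers": [120, 90, 40],
})

enrollment["key"] = enrollment["school"].map(normalize_name)
other["key"] = other["school"].map(normalize_name)

# how="outer" keeps unmatched rows from both sides; indicator=True adds a "_merge" column.
merged = enrollment.merge(
    other, on="key", how="outer", indicator=True, suffixes=("_enroll", "_other")
)
match_counts = merged["_merge"].value_counts().to_dict()
unmatched = merged.loc[merged["_merge"] != "both", ["school_enroll", "school_other", "_merge"]]

print(match_counts)
print(unmatched)
```

Looking at `match_counts` and `unmatched` before plotting anything is the check that was missing in the merge described above.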

Code Interpreter Makes Weird and Wrong Model Choices

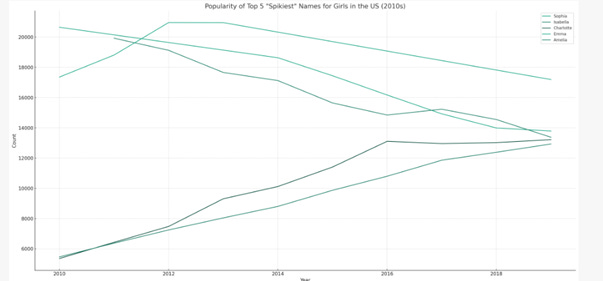

I gave ChatGPT a data set with the counts of baby names by year with the goal of finding “spiky” baby names, or names that suddenly gain in popularity and then suddenly fall. It started off by giving me names that, over the period in question, trended almost entirely up or almost entirely down, with no sharp changes year-to-year. Despite many iterations of prompting, it never found the spiky names, even when I gave it working code that produced what I was looking for. As an extra problem, the results it gave me in its text replies were sometimes different from what was shown on the graphs, even within the same prompt response: the answers it reported were different from what the code it gave me produced.
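As an illustration only, one way to make “spiky” concrete is to compare a name's peak count with its counts a few years before and after the peak. This is an assumed definition, not necessarily the one used in the test described above; the `spikiness` function, the `window` parameter, and the data are all hypothetical.

```python
import pandas as pd

def spikiness(counts: pd.Series, window: int = 2) -> float:
    """Ratio of the peak count to the mean of the counts `window` years
    before and after the peak (clamped to the ends of the series)."""
    counts = counts.sort_index()
    peak_pos = int(counts.to_numpy().argmax())
    before = counts.iloc[max(peak_pos - window, 0)]
    after = counts.iloc[min(peak_pos + window, len(counts) - 1)]
    baseline = (before + after) / 2
    return float(counts.iloc[peak_pos] / baseline) if baseline > 0 else float("inf")

# Made-up counts: one name spikes, the other rises steadily.
df = pd.DataFrame({
    "name": ["Spiky"] * 5 + ["Rising"] * 5,
    "year": list(range(2000, 2005)) * 2,
    "count": [100, 120, 900, 130, 110, 100, 200, 300, 400, 500],
})

scores = df.set_index("year").groupby("name")["count"].apply(spikiness)
print(scores.sort_values(ascending=False))
```

Under this definition a steadily rising or falling name scores close to 1, while a name that jumps and then collapses scores much higher, which is the distinction the prompts above were trying to get at.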

I gave it a data set with two variables and asked it to divide it into groups of low vs. high on both variables. It divided it using the medians for each variable - even though the result was four groups that varied significantly in size, and where there was a lot of in-group variation. That is, the groups didn’t make sense as groups – this was the wrong model to use.
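A quick check of group sizes would have exposed the problem with a median split. The sketch below uses made-up data in which the two variables are strongly related, so splitting each at its median produces quadrants of very different sizes.

```python
import pandas as pd

# Made-up data: x and y move together, so most points are low/low or high/high.
df = pd.DataFrame({
    "x": [1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
    "y": [1, 2, 3, 6, 4, 5, 7, 8, 9, 10],
})

# Median split on each variable separately.
x_high = df["x"] > df["x"].median()
y_high = df["y"] > df["y"].median()
df["group"] = (
    x_high.map({True: "high x", False: "low x"})
    + " / "
    + y_high.map({True: "high y", False: "low y"})
)

group_sizes = df["group"].value_counts().to_dict()
print(group_sizes)
```

When the sizes come out this lopsided, it is a sign that “low vs. high on both variables” may not describe real groups in the data, and that a different approach (clustering, for example) deserves a look.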

When I tried to get it to solve a problem to find missing variables, instead of using equations to solve for the data like I’d asked, it just wanted to fill in numbers. When I indicated that the numbers were between 0 and 10, it simply filled in 5s for the missing variables, presumably because 5 is the midpoint of that range.

Code Interpreter Errors Out

Sometimes, Code Interpreter would simply error out instead of producing results. In some ways, these cases concern me less. They make the user experience worse, but it’s at least clear that’s what’s happening.

I asked what packages were available, it gave me a list, and then it errored when it tried to import several of them. One of the packages it tried but failed to import was plotly, a very popular graphing library.

I asked it to generate a requirements.txt file (a list of all of the packages and their version numbers that a Python project depends on). It tried and failed five times before it produced a response.
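For reference, a pinned package list can be produced from the standard library alone. This is a minimal sketch, not what Code Interpreter ran; the `requirements_lines` helper is a hypothetical name, and note that it lists everything installed in the current environment rather than only the packages a specific project imports.

```python
from importlib.metadata import distributions

def requirements_lines() -> list[str]:
    """Return 'name==version' lines for every installed distribution."""
    pins = {}
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken or missing metadata
            pins[name.lower()] = f"{name}=={dist.version}"
    return [pins[key] for key in sorted(pins)]

lines = requirements_lines()
print("\n".join(lines))
```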

This is just a selection of the types of things I have run into. That said, Code Interpreter isn’t unusable — you just have to remember that it has extremely poor data intuitions, and watch it every step of the way.

How to Use Code Interpreter As An Assistant

Here’s what I would suggest for how to use this tool:

Look at your data in detail before you give it to Code Interpreter. Is there anything weird about it? Are there rows which shouldn’t be included, particularly at the bottom or interspersed throughout the data? Either strip them out, or tell Code Interpreter how to handle them.

What should it do with missing data? What does missing data mean, in the context of your data set? If you don’t know the answers to these, well, ChatGPT doesn’t either, so don’t just go with whatever it defaults to. It can tell you things like how many rows have missing values. However, if your data set uses a certain value to represent 'missing' data, it might not interpret that correctly. Again, Code Interpreter is no substitute for looking at your own data.
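Here is a small sketch of the placeholder-value problem, using made-up data in which -99 stands for “missing” (an assumption for this example; your data set may use a different code, or none). Unless the reader is told about the placeholder, it is treated as a real score.

```python
import io
import pandas as pd

# Made-up file: -99 is a placeholder for "missing", and school D is simply blank.
raw = io.StringIO("school,score\nA,82\nB,-99\nC,75\nD,\n")

naive = pd.read_csv(raw)  # -99 is read as a real score
raw.seek(0)
cleaned = pd.read_csv(raw, na_values={"score": ["-99"]})  # -99 is read as missing

print(naive["score"].isna().sum(), naive["score"].mean())
print(cleaned["score"].isna().sum(), cleaned["score"].mean())
```

The naive read reports one missing value and an average dragged far down by the placeholder; the cleaned read reports two missing values and an average based only on real scores.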

Think through every other choice you want it to make, from what kind of graph to what kind of model. Ask a lot of questions. If you’re merging in data sets, ask whether every pair was found, and which weren’t.

If you want to use numbers or charts that Code Interpreter creates for you, ask for the code. Specifically, ask for a .py file that does all of the steps from start to finish to generate that number or graph: it’ll generate one for you to download. Don’t just copy the code snippet it wrote in the most recent response, because that might not include previous data cleaning that it did when it initially read in the file. Ask it to document each line of code. Read the code. Run the code on your own system. And save it so you can share and replicate. This may seem like it kills some of the value of using Code Interpreter. But without it, your results may not even be reproducible, much less correct. Again, I got text responses summarizing data from ChatGPT which were different than what you would have gotten if you ran the code it generated.

When I used Code Interpreter in a step-by-step, holding-its-hand kind of way, I got useful and accurate results faster than I would have gotten them coding it myself - and much faster (and likely more accurately) than I would have gotten from processing the data in Excel.

For instance, when I explicitly told it to cluster my data, it did that immediately and well. When I asked it to change the formatting of my graphs–things like changing the axis formatting to include commas, or changing the legend location–it did that as well. Similar to using ChatGPT for coding, it gives better results with very specific instructions. And if you want to transition from Excel to Python, especially starting off with data sets that you already know well, I think this is a good tool.

The Illusion of Correctness

Calling ChatGPT a glorified autocorrect is a little unfair. It’s an incredibly glorified autocorrect that can do a lot of truly impressive synthesis of text, including coding, which is a kind of synthesis of text as well. It’s a big part of my coding process, and my coding process is better for it.

But I’m telling you, as someone who has a lot of experience both coding and analyzing data – this is, at best, a useful assistant. It can help you go faster. It can certainly write code for you. But you should not outsource your data analysis decisions to it, no matter how confidently it asserts its findings or how pretty its graphs are.