Named Entity Recognition Just Got a Lot Easier

I used GPT to find software and programming languages in USAJobs Listings. It was a big step up.

Data analysts, data scientists, and software developers place importance on the tools provided by their employers. For instance, someone who prefers working with Python may not appreciate being limited to Power BI, and the same applies in reverse.

A job listing is usually the first opportunity to introduce a position and organization to a candidate, so it’s important to share what tools and software the job involves. But USAJobs ads, including for data science and other data-intensive or technical jobs, frequently don’t do this.

The government is already at a disadvantage when it comes to technical recruiting. They almost never have recruiters. (Directorate for Digital Services has, but I’ve never heard of anyone else having them.) They also frequently can't match private sector salaries.

And for job candidates, the issue of job listings isn't just "what tools am I going to use?" (Although that's important.) It also gets at how organized and together the office is when it comes to technical staff. If technical people weren’t involved enough with writing the job ad to include this kind of information, that’s a bad sign.

Previous Efforts to Study This: Keyword Searches

A few years ago, when I was working for the Army doing People Analytics, I tried to quantify the degree to which the Army's job listings were mentioning particular tools or software on our USAJobs listings. And I found that we mostly were not.

But at the time, my method for looking for tools and software in text was very basic – I was looking for strings, or sequences of text like “Python.” I was probably not being case-sensitive – like, it would have picked up on “python” as well. I might have been using regular expressions, which let you look for more complicated patterns in text. But basically, it was “Ctrl-F”, but scripted and automated.

The results were still accurate, but they weren’t as systematic as they could have been because I was only going to find the specific tools and software that I searched for.

More recently, I re-implemented this process using GPT to do Named Entity Recognition. It does a lot better. It’s more comprehensive and more accurate. And it shows this issue of lack of of tools or software in job listings across the federal government.

Named Entity Recognition: Pinpointing Key Information

Named Entity Recognition (NER) is a technique used in natural language processing to identify and categorize important parts of text, such as names of people, organizations, locations, dates, and other specific terms. Named entities don’t have to be capitalized, but they frequently are. The same word may be a named entity in one context but not another:

For example, consider the following sentence:

“Monopoly is derived from The Landlord's Game, created by Lizzie Magie in the United States

In that sentence, the named entities are “Monopoly”, “The Landlord's Game”, “Lizzie Magie”, and “United States.” In a different sentence, like “AT&T has a monopoly on phone service”, the word “monopoly” would not be a named entity. (But AT&T would be.)

There are existing tools for doing Named Entity Recognition. A major one is a tool named spaCy. I didn’t try it last time I was doing this, but I tried it this time.

Here’s the code I ran in Python to get a sample text snippet and extract all of the entities from it.

import spacy # this loads spacy (you have to install it first)

nlp = spacy.load("en_core_web_md") # loads the core model

text = "I use Python, Java, and C++ for programming and work with TensorFlow and Django frameworks."

doc = nlp(text)

print(doc.ents)

The result was (Java, TensorFlow, Django). It didn’t find Python or C++. I tried again with a larger spacy model ("en_core_web_lg"), and this time it found Python and C++ but didn’t find Django*.

It would be possible to spend time working with Spacy to train a new model.

Alternatively, we can write a very small amount of code to get GPT to find the entities.

My GPT Prompt

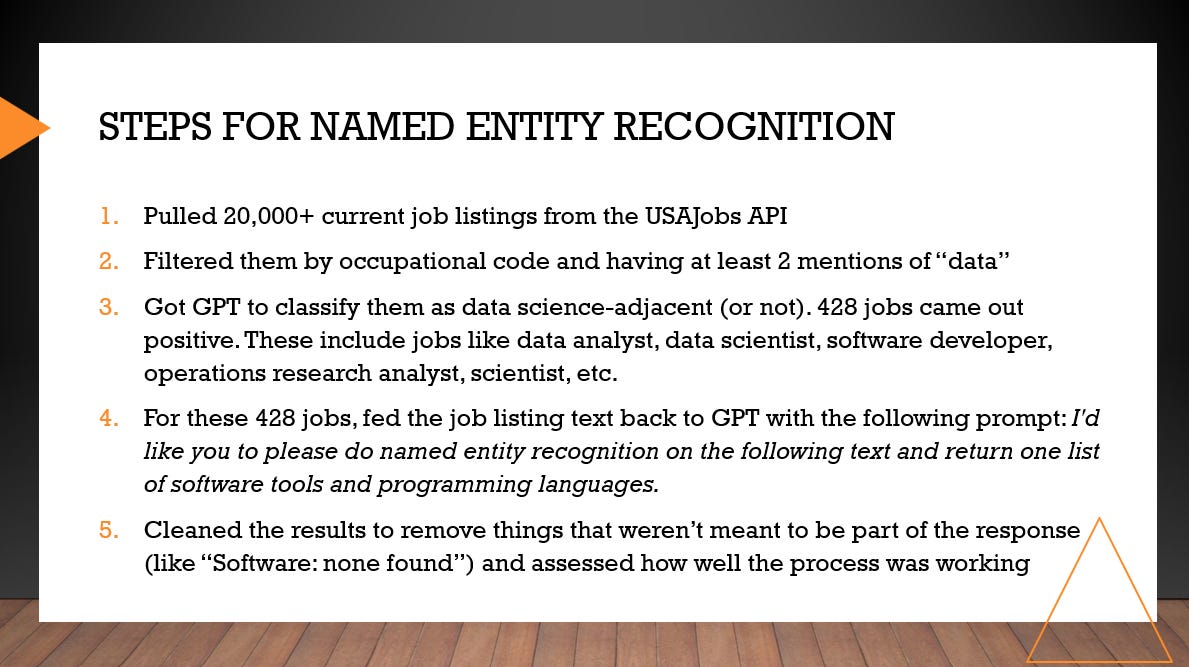

I previously used the USAJobs API to pull about 20,000 currently-listed jobs, coded about 2,000 of them as possibly data-related, and then used GPT to select the 428 which were data science or data science-adjacent. These are job listings for operations research analysts, software developers, information technology, scientists— a broad set of data-intensive and technical jobs.

This time, I iterated through each of my 428 job listings and fed the text of each job ad to GPT.

Here’s the prompt I gave to GPT to get it to find tools and software: I'd like you to please do named entity recognition on the following text and return one list of software tools and programming languages.

Example Results

The most common output I got was some variation of:

Software tools: None

Programming languages: None

Other responses include:

Software tools: USAJOBS Resume Builder, BI, RPA systems

Programming languages: None mentioned.

Software tools: Dredged Material Information System (DMIS), geographic information system (GIS)

Programming languages: None mentioned.

Software tools: GeoBase Program, AutoDesk MAP, AutoCAD, ArcGIS, MS SQL, Oracle, MS Access

Programming languages: None mentioned.

It would have been challenging to find all of these with just a keyword search because there was such a large variety of tools, and because I didn’t know going in what those tools would be.

Assessing Results: How Did GPT Perform?

I cleaned up the results to just get one list for each ad. For instance, for one of my examples above, the result would be ["USAJOBS Resume Builder", "BI", "RPA systems"].

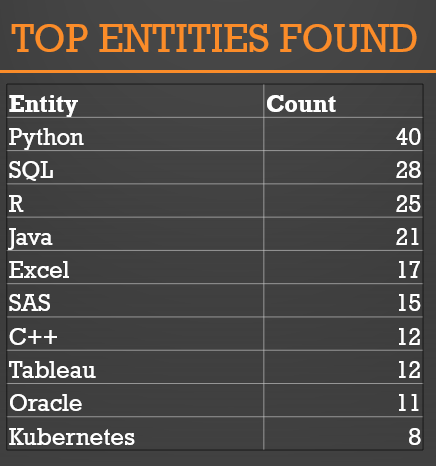

These were the top 10 entities I found, and these made sense in the context of a combination of data analyst, data scientist, and IT/developer jobs. The counts are distinct, meaning that, for instance, Python was found in 40 separate ads:

I then assessed the results from both ends:

Did GPT find stuff that wasn’t there? It did not. It did sometimes change the punctuation/formatting, or it would abstract something so that the phrase isn’t present in the original, like when it returned “'a scripting or programming language not specified” as an entity – it didn’t actually find a specific programming language, it just found that the ad was referring generically to a programming language. But it didn’t make stuff up.

Did GPT find the things that were there? For this, I looked at the top entities being found and searched every listing to see whether, if they were present, GPT was finding them. I found that GPT wasn’t 100% perfect, but it was very good. For instance, it didn’t extract “Java” from “integration with Java/Portal with SAP” or SQL from “Proficiency in writing and analyzing Linux shell script and SQL queries”. It also didn’t find SAS in at least one case where it definitely should have – but it also correctly didn’t find it when SAS was being used in a different context (“Science Analytics and Synthesis (SAS)”), so in general I think it did very well.

One area that these tests didn’t cover is whether there were named entities that GPT did not find at all, period. From doing spot checks, it didn’t catch everything all the time, but it was extremely good.

You can see the full results in terms of which entities are found and how many in the worldcloud. It did not not perform standardization. So, for instance, “Linux terminal” is listed separately from “Linux”, and there are at least three different ways “Microsoft Power BI” is getting listed. We could fix that if we wanted either manually or by feeding it back to GPT and asking it to standardize.

But this is also a mark of how successful this process was and how difficult it would be to replicate via keyword searches. GPT figured out that all of these are entities without having to create the kind of extremely specific keyword searches it would take to find all of the different ways someone might list a tool or software.

It also pulled out some phrases that I wouldn’t have pulled out, like “open-source policies” and “multiple program systems”. These were in paragraphs with other tools and software that it also (correctly) pulled out, and I wonder if that context was why it identified those phrases as named entities as well.

USAJobs Ads With No Named Entities

So, does the government list software or tools in their job ads for data science and data science-adjacent jobs? Mostly not – and with GPT, we can quantify this well and be more confident in the results.

Of the 428 jobs I coded, 271 had none, and a few more only mentioned Microsoft or Microsoft Office.

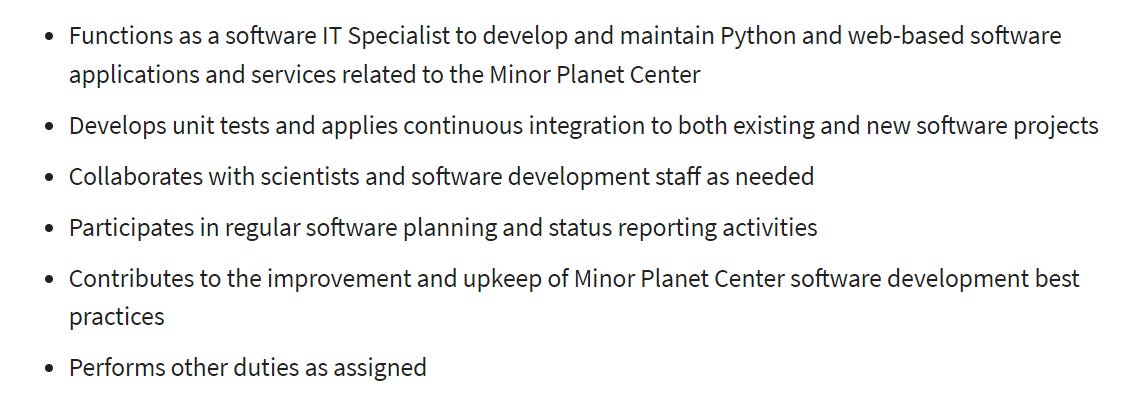

This is an example with no named entities that I picked randomly. It’s an Operations Research job with the Air Force.

Here’s the “Major Duties” section for the higher level that the job is being hired for.

They’re doing a lot with data. Are they coding? I do not know, and I definitely don’t know what they’re coding in. If code-first data science is important to a candidate, is this a good job for them? It's not clear.

USAJobs Ads With Too Many Named Entities

On the other end, there are job listings with so many things listed that it’s also hard to tell what the job involves or what they’re looking for in candidates.

For the 40 listings with “Python” in them, the median number of total entities found was 7.5. This is an excerpt from an IT specialist job for the VA with 24:

This is such a broad set of tools that it could describe a huge range of candidates. And some of the tools don’t correspond to the categories they’re under.

A Great Job Ad!

I wanted to see if, just by looking at the named entities, I could pick out a great job ad. I was looking for a list of software or languages that make sense together – for instance, that represent different parts of a tech stack rather than a bunch of different tools that you use for the same thing (like “[Excel, VBA, R, Python, SAS, R, Python]”)

This IT Specialist (Applications Software) position from the Smithsonian has the following list of entities, which meet that criterion: Docker, Git, PostgreSQL, Python, GitHub. These are all different tools that do different things! (Also, they’re good tools!)

This is the Duties section of that job:

This is the Qualifications section for candidates coming in as a GS-12:

This is clearly written. It’s clear what the job entails, it’s clear what the qualifications are, and it’s clear how the qualifications tie into the duties. I would be surprised if a technical person didn’t write this job ad, or at least have significant input into it. This is a good job ad!

Conclusion

There are three general ways I’m extracting information from text using GPT these days: classification, summarization, and named entity recognition. All of these were things that could have been done previously to some extent in Python – and named entity recognition, the most easily. But the way I have been doing this – and I think the way a lot of people have been – was via ad hoc keyword searches. With GPT, I can quickly and easily do better – and quickly evaluate how well it’s working.

And this information – the extracted entities – do have predictive value. I was able to see the “Docker, Git, PostgreSQL, Python, GitHub” list and suspect that this was going to be a pretty good job listing.

As always, my code for this exercise is on GitHub, as well as the Excel file that was my result.

If you have a similar use case, particularly if you want to improve federal hiring and you need some data and analysis, please send me an email.

_________________

*I also analyzed all of the text that I gave to GPT via spaCy’s large model. That’s included in the code. The spaCy results are also in the Excel file that’s in the GitHub repo, so you can look at it if you want. The comparison between GPT and spaCy results could be its own post, but the summary is that it failed to find some of the entities, but also that the entity types it has built-in aren’t useful for this project. It doesn’t have anything as a granular as “software tools or programming languages.” It thought ‘Git’ was a person and 'MS Access' was an organization, which is a problem from a data cleaning perspective because it also found a lot of other people and organizations that we don’t care about when we’re looking just for software and tools, so a lot of manual cleaning would have been needed.

Excellent analysis, this really highlights a critical barrier. What do you think would be the most effective, scalable way to bridge this gap between technical reality and HR proceses?