Limitations of General Benchmarks for Language Models

Does it matter what ChatGPT's SAT score is? Should anyone care how it performs on the LSAT or an IQ test?

In one sense, it does matter. If you're administering a high-stakes exam, you’ll want to know how well AI models perform on questions currently in circulation – in case you need to take further steps to police their access or monitor testing results for suspicious patterns.

But do standardized test scores validated on people tell us anything about the general capabilities of a language model? That is, if Gemini 1.0 performs better than GPT-4 on the MCAT, is that model more capable in a broad sense? What if it performs better than half of all people versus ninety percent – does that signify something meaningful about its general capabilities relative to humans?

Not necessarily. A given score means something very different for a person than it does for a language model. Exploring this difference – and the limitations of general-purpose evaluations overall – can also help us think about how to create and validate benchmarks.

Tests as Proxies for Capabilities and Outcomes

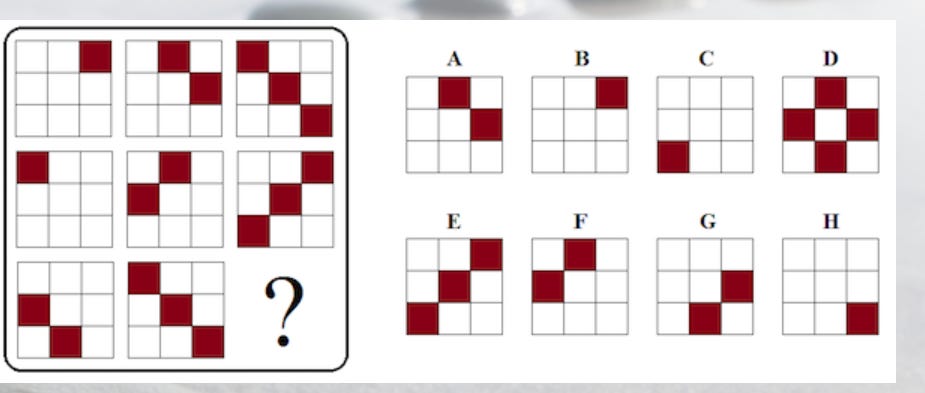

We administer standardized tests to people because we think performance on those tests tells us what that person knows beyond what’s on the test – or how they’ll perform in the future on some set of tasks or other outcomes. The distance between what we're assessing and what we actually care about depends on the test. For instance, Raven's Progressive Matrices is a non-verbal IQ test where you see an incomplete 3x3 grid and select which choice completes the bottom square. There are no tasks in your everyday life that mimic Raven's Progressive Matrices: the only reason someone might think it's interesting or useful is to the extent to which it's predictive of outcomes in a variety of areas that look nothing like the test.

On the other end of the spectrum, the Defense Language Proficiency Test (DLPT) measures a set of skills that are much closer to the actual topics of interest. This is relevant for jobs like being a translator. But it's still just a proxy: if you cheated by finding the actual test passages and questions ahead of time and memorizing them, it would make your score meaningless – again, because the point of the test isn't just to assess your performance on those specific questions, but to measure your broader language skills.

Something like the LSAT falls in between these two. There's some relationship between the types of reasoning questions asked on the LSAT and the kind of thinking you'll do in law school or even as a practicing lawyer, but it's more disconnected than, say, the DLPT and the work you'd do as a translator.

Differential Prediction and LLMs

People who study how candidates for schools or jobs are assessed talk about the concept of "differential prediction." It refers to how you can give different groups the same assessment, but the relationship between assessment performance and the outcome of interest is different. For instance, if you gave people with sight impairments a test of reading comprehension with no assistive devices – even though in their actual lives they have access to magnification devices and screen readers – their scores would systematically underpredict their performance.

A lot of users' observations regarding the weirdness of LLM behavior stems from differential prediction for humans vs. LLMs. They point out that the LLM can do Task A, which is impressive or difficult, but not Task B, which is very simple for a person. For instance, some models can write you a fluent essay but fail to count the letters in a word. Whereas among people, someone who can write even a bad essay on literature is unlikely to struggle with counting letter occurrences. This disconnect between capabilities which are closely linked in humans illustrates the differential prediction challenge when evaluating LLMs on tests designed for people.

One cause of differential prediction is memorization. If a model was fed a real test question and answers in its training data, we should expect it to perform well on that question, even if it can't answer similar questions. This is one reason why an LLM performing well on a test doesn’t necessarily indicate broader capabilities, and why one LLM may perform better than another on a given test but not on that subject in general.

But that's not the only issue. LLMs operate in a fundamentally different manner from humans. While humans leverage big conceptual models and broad patterns, today's LLMs find narrower types of patterns in text and generate new data based on those observed patterns. That still can lead to behavior that's distinct from pure memorization – they’re able to synthesize data and extrapolate to some degree – but because they work differently, the correlations between capabilities are also different.

And these issues are going to plague any attempt to gauge generalized capabilities via standardized tests that were made for people and validated on outcomes that matter for people. For instance, just because LSAT performance is correlated with passing the bar exam in people doesn't mean it will be in LLMs – and if passing the bar is correlated with being a good lawyer in humans*, that doesn't mean it'll be correlated in LLMs with the kinds of tasks lawyers do.

Existing Benchmarks and the Problem of General Capabilities

I’ve discussed some problems with using generalized benchmarks intended for humans. But what about the ones built to evaluate LLMs, like those that HuggingFace tracks for its leaderboard? Are they good evaluators of LLM performance overall?

Kind of. There’s some relationship between performance on those metrics and peoples’ self-reported experiences with those models. But there are also problems, including the sensitivity of models to things like the order of the choices or method of answer selection.

Even fixing those problems, we get back to this issue of, what’s the thing we’re actually trying to measure? There was a New York Times article in April which argued that the fact that “we don’t really know how smart they are” was an evaluation problem. The author hoped for a “kind of Wirecutter-style publication…to take on the task of reviewing new A.I. products.”

But evaluating AI models isn’t like evaluating chairs or headphones, where there’s a fairly narrow set of criteria that might be relevant. People want to use LLMs for many different types of tasks. Among humans, you wouldn’t expect an amazing correlation between the quality of the poetry a person writes and their scores on biology tests. You’d probably expect some, and that’s about what we see with current LLM benchmarks. But the broader the set of tasks you want to use a model for, the less likely it is that you’ll be able to develop a general-purpose test that can tell you which model to use**.

And there is a parallel there for humans – when it comes to hiring, we often rely on work samples or task-specific assessments rather than just general aptitude tests. A software company might ask candidates to write code to solve a specific problem, or a marketing firm might ask for a writing sample tailored to a particular audience. These evaluations are designed to directly measure the skills that matter most for the job at hand. We expect the evaluation of people to be both specific and, nonetheless, imperfect.

Likewise, for LLMs, maybe the answer lies in targeted evaluations that align closely with the specific capabilities we care about for any given application. While general-purpose benchmarks can provide a useful overview, they're unlikely to be great proxies for performance on the wide range of tasks LLMs might be used for – whether they were initially benchmarks intended for humans, or for language models.

What Does This Mean for My Benchmark?

So how can we create more targeted benchmarks aligned with specific use cases, while still being able to easily run an evaluation?

A recent paper titled Multiple-Choice Questions are Efficient and Robust LLM Evaluators gives an example of how to test a multiple-choice benchmark for open-ended tasks. The authors show that a model’s ability to solve open-ended math problems is strongly correlated with its ability to solve multiple-choice versions of those same problems. They also convert some existing code generation benchmarks – open-ended questions where the model produces code in response to questions – into multiple-choice tests, and again find correlation in performance. The advantage of these new benchmarks is that it's easier and faster to run/grade them, compared to open-ended tests.

This can show us how to do something similar with our own specific metrics.

For example, let's say your goal is to assess whether an LLM could provide instructions on a particular task. Break that task down into a set of steps that the model would have to output in order to be useful, and begin with asking open-ended questions.

Next, have human experts grade the model's open-ended responses for whether they contain the information you’re looking for, and/or use a grading rubric in conjunction with an LLM grader. In parallel, you could construct simplified prompts, multiple-choice questions, fill-in-the-blanks, etc. related either to the same task or to the broader field, and compare model performance to identify which formats correlate well with performance on the open-ended assessment. Now, this is where you might run into issues like memorization – if you try giving the model an existing test and it’s successful due to memorization, then performance on that test likely won’t proxy well for your open-ended task.

If you create something that proxies well across a range of models, you have a benchmark for that task. Now do it a few more times with a few other related tasks. At a certain point, if it seems like your easy-to-measure proxies work well for your tasks of interest, then you have a method for testing tasks in general.

The issue you'll still run into is generalizability across new models and tasks. If we come up with a benchmark that proxies well in some set of models for certain tasks, will it keep proxying well on new model architectures or task types? Just as standardized tests for humans need to be re-validated, any LLM proxy benchmark whose integrity is predicated on these relationships should be periodically re-assessed to ensure the relationships between it and the capabilities of interest remain valid as the underlying systems evolve.

And if you do this, you’ll know what a given score means in terms of capabilities. It will be connected to an actual outcome. It won’t be a general-purpose evaluation which can cover everything from biology tests to poetry writing, but it will give you information about the specific tasks you’re trying to use the model for.

__________________________

*This has actually been studied to some degree, although, as the authors discuss, there’s an inherent range restriction issue: you can’t observe the performance of people who never passed the bar, because they didn’t become lawyers. https://arc.accesslex.org/cgi/viewcontent.cgi?article=1083&context=grantee

**This is also why if you’re evaluating a model for bias, you need to do so on your specific task.