Large language models like GPT-4 are going to change how we write code, at a minimum by assisting with rote tasks like drafting documentation and writing test cases. They may eventually do much more than that, and I don’t think anyone knows how this is going to affect labor market outcomes for programmers. You might think this is an odd time to learn to code.

However, if you’re already cleaning and analyzing data in Excel or Alteryx, or making dashboards in tools like Qlik or Tableau, then GPT-4 makes learning to code a much better deal.

The Promise of No-Code and Low-Code Tools

The promise of no-code and low-code tools for cleaning, analyzing, and visualizing data was a shorter learning curve: people could do these tasks without learning to code. That lets workers specialize in these tools and develop expertise in a particular subject, like business or accounting, while still being able to analyze the data in their area of expertise.

But there are a lot of costs associated with this model.

First, there are several challenges with transparency and replication when using these tools. These challenges make it difficult for others to evaluate and build upon your work, and also make it harder for you to catch your own mistakes. Here's a breakdown of the main issues, not all of which are going to apply to every tool.

Mixed data and code: In a graphical user interface, your data and your processes (the things you’re doing to the data) are often intertwined. This makes it difficult to isolate and review the logic of your analysis, unlike in a text-based programming language, where you can clearly see each operation being performed on the data.

Lack of function-level documentation: Because some major low-code and no-code tools are graphical, it's challenging to write documentation for each step of your analysis. With a code-based approach, by contrast, you can easily add comments or function-level documentation that explains what each piece of code does and why.

Version control limitations: While version control options exist for these tools, they don't offer the same functionality as those used with text-based programming languages. Graphical approaches make it difficult to compare different versions of a file side by side, see what changed, or decide which changes to keep when merging (if merging is an option at all). Version control is also less widely used with these tools than with code: the gap between how version control could be used and how it actually is used is bigger for no-code and low-code tools than it is for code.
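To make the contrast concrete, here is a minimal Python sketch (using pandas) of a single cleaning step written as code. The column name `account_id` and the `TEST-` prefix rule are hypothetical, made up for illustration. The point is that the operation is spelled out in text, kept separate from the data it runs on, and documented with a docstring, so a later change to the rule would show up as a readable line-by-line diff in version control.

```python
import pandas as pd


def drop_test_accounts(transactions: pd.DataFrame) -> pd.DataFrame:
    """Remove rows that belong to internal test accounts.

    Hypothetical business rule: test accounts have an account_id that
    starts with "TEST-". Rows with a missing account_id are kept.
    """
    # Boolean mask: True where account_id starts with the test prefix.
    # na=False treats missing IDs as "not a test account".
    is_test_account = transactions["account_id"].str.startswith("TEST-", na=False)
    # Keep every other row and renumber the rows from 0.
    return transactions.loc[~is_test_account].reset_index(drop=True)
```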

You also may get stuck in a particular proprietary ecosystem. For example, Alteryx is a tool you can use to read in, clean, analyze, and visualize your data. The selling point is that you can easily do all of these things without code. However, this ease of use comes at a cost. If you want to leave, you’ll have to undertake a large, costly migration project, because your workflows are saved as XML files in Alteryx’s own workflow format, which you can’t easily bring into any other tool or language.

By contrast, when you’re working in a programming language like Python, your code lives (or should live) in git repositories. You might still pay someone for servers and to host your dashboards, but if you decide to change that (or do it yourself), you’re not going to have to start from scratch.

Another cost to low-code or no-code ecosystems is that they often don’t come with the robust functionality and community resources that programming languages such as R and Python do.

For instance, let's say you have a collection of Excel files you want to use as source data. You need to read each tab from each file as a table or data frame, add one column with the tab name as its value, and another column with the name of the Excel file. Then you want to concatenate all of those tables into one. In Python, this task can be accomplished with just a few lines of code. Maybe it's not impossible to achieve this with a drag-and-drop tool, but there is a reason people often resort to manual methods or avoid this sort of task altogether.
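As a rough illustration of "a few lines of code," here is one way to do it with pandas. It is a sketch, not the only approach: it assumes the workbooks are `.xlsx` files in a single folder and that an Excel engine such as openpyxl is installed for `pd.read_excel`. The new column names `sheet_name` and `file_name` are illustrative choices. Passing `sheet_name=None` to `pd.read_excel` returns a dictionary of every tab in the workbook, keyed by tab name.

```python
from pathlib import Path

import pandas as pd


def stack_sheets(sheets_by_file: dict) -> pd.DataFrame:
    """Concatenate {file name: {tab name: DataFrame}} into one table.

    Each row gets two extra columns recording where it came from.
    """
    frames = []
    for file_name, sheets in sheets_by_file.items():
        for sheet_name, table in sheets.items():
            # assign() copies the scalar labels down every row of the tab.
            frames.append(table.assign(sheet_name=sheet_name, file_name=file_name))
    # ignore_index=True renumbers rows 0..n-1 across all tabs and files.
    return pd.concat(frames, ignore_index=True)


def combine_excel_folder(folder: str) -> pd.DataFrame:
    """Read every tab of every .xlsx file in `folder` and stack them."""
    sheets_by_file = {
        # sheet_name=None loads all tabs as {tab name: DataFrame}.
        path.name: pd.read_excel(path, sheet_name=None)
        for path in sorted(Path(folder).glob("*.xlsx"))
        # Skip the temporary "~$" lock files Excel creates for open workbooks.
        if not path.name.startswith("~$")
    }
    return stack_sheets(sheets_by_file)
```

Usage would look like `combined = combine_excel_folder("source_data")`, where `source_data` is a hypothetical folder name. `pd.concat` lines tables up by column name, so tabs with different columns end up with empty (NaN) cells where they don't match, and it raises an error if the folder contains no workbooks.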

A common rejoinder is that when you pay a vendor, you get a level of customer support you don’t get with open-source tools. That’s true, but you can also pay someone to support your open-source tools, and your exit costs will still be lower than with a proprietary tool.

But The Costs Of Coding Were Real, Too

But even with all of these issues, there were still a lot of contexts in which it made more sense to be using these no-code and low-code tools instead of coding. A big reason was the short learning curve associated with using these tools.

In the best-case scenario, or even just the pretty-good one, coding tools offer more accuracy, transparency, and replicability than drag-and-drop tools. But if your Excel expert is asked to start coding in R or Python, the results could be worse if they don't understand the code they're writing or aren't using good coding practices. And because learning to code is harder than learning to use Excel, fewer employees have the skills to do it.

But now, GPT-4 has the ability to go from narrow descriptions of what you want to build straight to working code, and to explain to you along the way what it’s doing and why. The learning curve for coding has collapsed, dramatically reducing the advantage of drag-and-drop tools.

How To Use GPT To Write Code You Understand

If you’re already cleaning, analyzing, or visualizing data, then you already have a handle on the kinds of inputs to give GPT. GPT is going to be helpful because you already know what you’re trying to do. You have the concept; you just need help translating it into code.

1) Going from Concept to Code

I wanted to use GPT-4 to code in a language that I don’t work in, to imitate as closely as I could what someone moving from no-code or low-code tools to a programming language would do. It’s not a perfect analogy, because I know broadly what’s possible, so I know what to ask for.

Here are some of the prompt types I gave it, after specifying that I wanted the results written in R and that I’m very much not proficient in R – I’ve written a very small amount of code in it over the years, but I’m still at the level where I would have to look up every line I wanted to write.

“I want to automate downloading this file, unzipping it, and putting it into a dataframe or table or whatever: https://datasets.imdbws.com/name.basics.tsv.gz”
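The prompts above asked for R; this is a rough Python equivalent using pandas, not the code GPT-4 returned. `pd.read_csv` can read straight from a URL and decompress gzip on the fly, so downloading and unzipping collapse into one call. Per IMDb's dataset documentation, the files are tab-separated and use `\N` for missing values. Reading every column as text (`dtype=str`) is a simplifying choice here.

```python
import csv

import pandas as pd

IMDB_NAMES_URL = "https://datasets.imdbws.com/name.basics.tsv.gz"


def load_imdb_tsv(source: str = IMDB_NAMES_URL) -> pd.DataFrame:
    """Download (or open) a gzipped IMDb TSV file and return a DataFrame."""
    return pd.read_csv(
        source,
        sep="\t",                # tab-separated values
        compression="gzip",      # decompress on the fly; no separate unzip step
        na_values=r"\N",         # IMDb writes missing values as \N
        quoting=csv.QUOTE_NONE,  # treat quote characters as ordinary text
        dtype=str,               # keep every column as text; convert later if needed
    )
```

Calling `load_imdb_tsv()` with no arguments downloads the names file; the same function also accepts a local path to a `.tsv.gz` file. The full file is large, so expect the first run to take a while.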

“I want to generate a table with this data. This is how it's structured [pasted documentation on the column names and descriptions from https://www.imdb.com/interfaces/] I want to pull first names out of the nconst field (I guess by splitting it and taking the first part?), put those in a field called "firstName", and then I want to create a new table/dataframe that aggregates to get counts of each firstName”

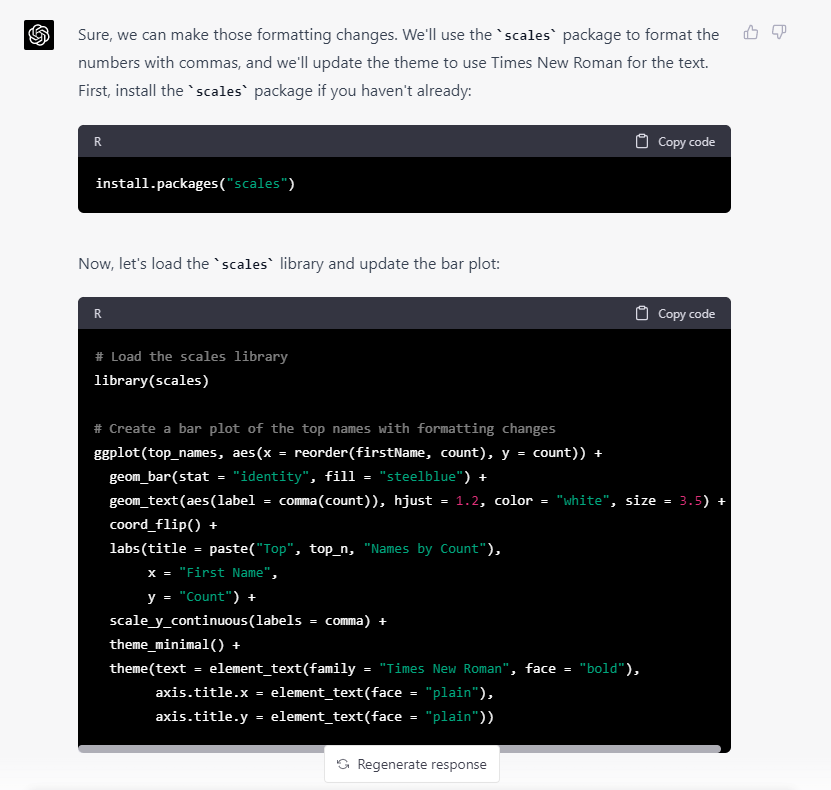
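One thing worth knowing before running anything like this: in IMDb's `name.basics` file, `nconst` is an identifier (values look like `nm0000001`), and the person's name lives in `primaryName`, so the split has to happen on `primaryName`. Below is a minimal pandas sketch of this step in Python rather than R, assuming the file has already been loaded into a DataFrame with IMDb's column names. Treating everything before the first space as the "first name" is a simplification that will mishandle some names.

```python
import pandas as pd


def count_first_names(names: pd.DataFrame) -> pd.DataFrame:
    """Return a table of first names and how many people share each one."""
    with_first = names.assign(
        # split() breaks on whitespace; .str[0] keeps the first piece.
        firstName=names["primaryName"].str.split().str[0]
    )
    return (
        with_first.groupby("firstName")  # rows with no name are dropped here
        .size()                          # number of rows in each group
        .reset_index(name="count")
        .sort_values("count", ascending=False, ignore_index=True)
    )
```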

“I want to make a bar chart with the top 10 names in terms of counts, I want it in Arial, with 14 pt font for the title and 12 pt font for the labels, and I want the title to be "Top 10 Count by Name" Can you write me code?” Followed by: “I want to make some formatting changes. I want the number labels to either be formatted with commas, or if there's some automated way that it can look at the numbers and determine a good unit -- like "thousands" or "millions"”
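A matplotlib version of that request might look like the sketch below, in Python rather than the R the prompts asked for. It assumes a DataFrame with `firstName` and `count` columns. `human_readable` is a hypothetical helper showing one way to handle the "good unit" request: it labels ticks with K, M, or B suffixes. If Arial is not installed, matplotlib logs a warning and falls back to a default font.

```python
import matplotlib
import pandas as pd
from matplotlib.figure import Figure
from matplotlib.ticker import FuncFormatter


def human_readable(value, _pos=None):
    """Format an axis value as 1.5K, 2.3M, 4.0B, or a whole number below 1,000."""
    for threshold, suffix in ((1e9, "B"), (1e6, "M"), (1e3, "K")):
        if abs(value) >= threshold:
            return f"{value / threshold:,.1f}{suffix}"
    return f"{value:,.0f}"


def plot_top_names(counts: pd.DataFrame, output_path: str = "top_names.png") -> Figure:
    """Save a bar chart of the 10 most common first names and return the figure."""
    top10 = counts.nlargest(10, "count")
    # Arial, 12 pt by default, which covers the tick and axis labels.
    with matplotlib.rc_context({"font.family": "Arial", "font.size": 12}):
        fig = Figure(figsize=(8, 5))
        ax = fig.subplots()
        ax.bar(top10["firstName"], top10["count"])
        ax.set_title("Top 10 Count by Name", fontsize=14)
        ax.set_xlabel("First name")
        ax.set_ylabel("Count")
        # For plain comma-separated numbers instead, use
        # matplotlib.ticker.StrMethodFormatter("{x:,.0f}").
        ax.yaxis.set_major_formatter(FuncFormatter(human_readable))
        # Save inside the context so the font settings apply when drawing.
        fig.savefig(output_path, bbox_inches="tight")
    return fig
```

Building a `Figure` directly, rather than going through `pyplot`, keeps the function usable in scripts and on servers without a display.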

You can see from all of these prompts that I still need to know what I want to do, although if I wanted a discussion of different chart types, for instance, I could have that conversation too. But if you already know what you’re doing conceptually, you’re well positioned to get the code to do it.

You should expect to do some troubleshooting: some of the code will not work on the first try, particularly if you’re asking for more complicated things. But you were always going to write code that didn’t work and have to troubleshoot it, however you started coding, and the process is faster here. If you can turn what would have been a frustrating two-hour debugging session into a few minutes, you can get a lot more done, and you’ll be more likely to stick with it.

2) Ask It to Explain to You What It’s Doing

You can definitely use GPT to produce code that runs but that you don’t understand. I do not think this is a good idea! But you can also use it in a way that helps you learn what the code is doing and how to modify it if it’s not doing what you want.

Here are some of the types of prompts GPT can answer:

Can you please explain to me in detail what every piece of this means?

Can you explain to me in a really detailed way, like line-by-line, what every part of this is doing?

Can you explain to me what a data frame is?

Can you explain to me what a function is?

You could, of course, Google these last two. But GPT explains them in the context of the conversation you’re already having, including the fact that you’re a brand-new R programmer. And you can ask follow-up questions.

If you want to learn to code independently, I recommend using GPT as a starting point: ask these kinds of questions, and use the answers to troubleshoot, modify, and write your own code. Use it alongside the kinds of resources you would have used before, like classes and books.

3) Learning the Broader Coding Ecosystem

There are some practices that will help you get the full benefits of using code rather than low-code or no-code tools, but they have learning curves of their own. Probably the best example is git, a tool for version control, which means managing different versions of the same file or files. If you’re writing code, you need version control. Git is fairly easy to get started with, but it’s not nothing, and you can get yourself into situations that are annoying and not obvious how to resolve.

But if you ask GPT for best practices you should be using as a new developer or data scientist, version control is one of the practices it will tell you about – and then it will tell you how to use it.

This is the beginning of the response I got when I gave GPT-4 the following prompt: “can you help me get started, as a total beginner, using git and github?”

“Sure, I can definitely help you get started with Git and GitHub!

Git is a version control system that allows you to track changes to your code over time. It can be used locally on your computer, or with a remote repository service like GitHub. GitHub is a popular web-based platform for hosting Git repositories.

Here are the general steps to getting started with Git and GitHub:

1. Install Git on your computer. You can download Git from the official website: https://git-scm.com/downloads.

2. Create a GitHub account. Go to the GitHub website and sign up for a free account.

3. Set up your Git configuration. Open up your terminal or command prompt and type in the following commands:”

It then gave me the commands to get started with creating a repository, adding files to it, and pushing it to GitHub. There are a million resources online for doing this already; this isn’t secret information. But GPT still reduces the cost, because you don’t have to seek those resources out, and if something doesn’t go smoothly, you can ask follow-up questions about the behavior you’re seeing or the error messages you’re getting.

This is just one example of best practices in the larger coding ecosystem that GPT can suggest to you and/or walk you through. (Others, in case you want to know what to ask about, include using a virtual environment, writing documentation, writing descriptive variable and function names, and testing your code.)
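To give a flavor of two of those practices, descriptive names with documentation and testing, here is a small Python sketch. The function and test names are hypothetical. Test runners such as pytest automatically collect functions whose names start with `test` from files named like `test_*.py`; the `__main__` block lets you run the same check with plain `python` as well.

```python
def first_name(full_name: str) -> str:
    """Return the first whitespace-separated word of a name.

    Returns an empty string for a blank name instead of raising an error.
    """
    parts = full_name.split()
    return parts[0] if parts else ""


def test_first_name():
    # Ordinary two-part name.
    assert first_name("Fred Astaire") == "Fred"
    # Extra whitespace should not matter.
    assert first_name("  Cher  ") == "Cher"
    # Edge case: an empty string should not crash.
    assert first_name("") == ""


if __name__ == "__main__":
    test_first_name()
    print("All tests passed.")
```

Writing the edge cases down as tests means that if you (or GPT) later change `first_name`, rerunning the tests tells you immediately whether the old behavior still holds.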

The Path Forward

We don’t know what the full impact of large language models is going to be on the future of coding. But the present of coding, coding right now, is that it just got a lot more accessible to individuals and organizations that currently use no-code and low-code tools for their data cleaning, analysis, and visualization. The learning curve has changed, and the trade-offs have changed with it. You can reap a lot of the benefits of coding, and of coding well (transparency, replicability, version control, and the ability to escape or avoid monopolistic, proprietary environments with high exit costs), while getting up and running faster.

You can wait to see where large language models are going to go, or you can get started now.