Fail Quickly and Don’t Solve The Coding Problems You Don’t Need to Solve

A few days ago, I was trying to fine-tune an open-source Large Language Model (LLM) on my Windows laptop, meaning I wanted to take an existing model and tweak it with new data. I hadn’t done this before, so nearly every piece of this project was new to me.

There was a Python package I wanted to use, but I was struggling to get it working. Initially, I thought it was because it was incompatible with other packages that I was running, but then I realized the issue might be that it couldn’t run on my version of Python. I was going around in circles fighting with Windows and Python for about an hour before I paused and thought about what I was doing.

Managing Python versions and package dependencies on Windows is a notoriously frustrating task*. But I realized it was also one I didn’t actually need to solve, because this wasn’t going to wind up running on Windows – if I could get it working at all, it was going to run on Linux, where these issues are, blissfully, much closer to solved. So why was I fighting to solve a problem that wasn’t actually a necessary part of this project?

The next part of this story is that I transferred this to my Linux server, it immediately worked, and then I went in another direction anyway.

I realized as this was happening that one of the things that’s made me a more efficient data scientist over the years is that it takes me less time – although still more time than I’d like – to figure out what problems I actually need to solve and what problems I don’t. This means I spend less time trying to solve the ones I don’t need to solve – and that if a project is going to fail, I’m able to figure that out sooner and fail faster.

I think this is a useful framework to have if you’re getting started with coding, and it’s one I wish I had earlier.

Each Section Of Your Project Takes Inputs, Returns Outputs

I’m going to use my “fine-tune an LLM” project as an example. I started out thinking there were going to be three sections of this project:

Grab some recently-generated text data - in this case, just-published news articles. Inputs: None. Outputs: Unstructured text data.

Algorithmically transform them into labeled data. Inputs: unstructured text data. Outputs: Structured text data, like Python dictionaries, or JSON files, that contain an instruction, an input, and an output. The instruction could be something like “summarize this data”, and then the input would be a longer piece of data and the output a shorter, summarized version.

Take that labeled data and use it to fine-tune an existing LLM. Inputs: Model, structured text data. Outputs: New version of that model.

Each of these pieces can be done separately from each other – in any order, or simultaneously - so long as we are able to mock up a version of the inputs for the relevant section.

For instance, I could take existing labeled data and an LLM and use that to fine-tune the LLM, and then later try to solve the “generate labeled data” problem.
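Here is a minimal sketch of that idea, not the code from my actual project. Every function name, the placeholder strings, and the stubbed-out "training" step are assumptions made up for illustration. The point is only that each section is a function with clear inputs and outputs, so the last one can be exercised with hand-written mock data before the first two exist.

```python
from typing import Dict, List


def fetch_articles() -> List[str]:
    """Section 1 (stub): no inputs, returns unstructured text."""
    return ["Placeholder article text about a city council vote."]


def label_articles(articles: List[str]) -> List[Dict[str, str]]:
    """Section 2 (stub): turns raw text into instruction/input/output records."""
    return [
        {"instruction": "Summarize this text.", "input": text, "output": text[:40]}
        for text in articles
    ]


def fine_tune(model_name: str, records: List[Dict[str, str]]) -> str:
    """Section 3 (stub): a real version would train a model.

    This placeholder only checks that the records have the expected keys
    and returns a made-up name for the "new" model.
    """
    required = {"instruction", "input", "output"}
    for record in records:
        missing = required - record.keys()
        if missing:
            raise ValueError(f"record is missing keys: {sorted(missing)}")
    return f"{model_name}-finetuned-on-{len(records)}-records"


# Hand-written mock input lets section 3 run before sections 1 and 2 exist.
MOCK_RECORDS = [
    {
        "instruction": "Summarize this text.",
        "input": "A long placeholder article.",
        "output": "A short placeholder summary.",
    }
]

print(fine_tune("base-model", MOCK_RECORDS))
# Later, the real pipeline is just the three sections chained together.
print(fine_tune("base-model", label_articles(fetch_articles())))
```

Once the risky section works against mock data, swapping in the real output of the earlier sections doesn't change its interface.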

But these parts aren’t equal in terms of how central they are to my project, or in terms of how likely I am to fail at getting them working.

Fail Quickly If You’re Going to Fail

Frequently when I’m starting on a project, I don’t know if it’s going to work out. Maybe I’m trying to analyze a data set – but does the variable I want even exist? Is it populated enough that I can actually use it? Or there’s an API – but do I have the permissions or technical knowledge to get access to it? Or a package - but do I have the memory to run it, or am I allowed to get it installed in the environment where I would need it?
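For the data set question, a check like this can take a couple of minutes and answer whether there is a project at all. This is a rough sketch under assumptions: the rows are already loaded as a list of dictionaries, and the function name and sample values are invented for the example.

```python
from typing import Dict, List, Optional


def populated_fraction(
    rows: List[Dict[str, Optional[str]]], column: str
) -> Optional[float]:
    """Return the share of rows with a non-empty value for `column`.

    Returns None if the column never appears, which usually means
    there is no project to do with this data set.
    """
    if not any(column in row for row in rows):
        return None
    filled = sum(1 for row in rows if row.get(column) not in (None, ""))
    return filled / len(rows)


# Made-up sample rows, standing in for a real data set.
rows = [
    {"id": "1", "income": "52000"},
    {"id": "2", "income": ""},
    {"id": "3", "income": None},
    {"id": "4", "income": "61000"},
]

print(populated_fraction(rows, "income"))  # 0.5
print(populated_fraction(rows, "age"))     # None
```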

If there’s no project because I can’t get the crucial-and-might-not-work part working, I want to figure that out as fast as possible, even if, process-wise, it’s going to be the last part of my code to run. I don’t want to put in a lot of work doing all the steps leading up to this step if the thing that actually makes this a project is going to fail.

In this case, the needs-to-happen-the-most step is the last one. If I can’t algorithmically generate structured data, there’s still the possibility of generating it manually. And pulling data from the internet is something I already know I can do – but even if, for whatever reason, I couldn’t, it wouldn’t actually matter, because the use case this is ultimately intended for doesn’t require pulling data from the internet.

But if I can’t train the model, there’s no there there. So I wanted as quickly as possible to see if I could do it.

Don’t Solve The Problem You Don’t Need To Solve

Where this started was that I was an hour into trying to figure out how to get this package installed on my Windows computer and fighting with various Python dependency issues, including the version of Python that I was running. This was a frustrating hour and a waste of time, but at least I only fought with it for an hour before just doing it on my Linux server instead.

But I think back to earlier stages of coding where I spent a lot more hours trying to solve things that actually weren’t integral to the project – and I look forward to a future date where it’ll only take me five minutes to realize I don’t need to solve this problem.

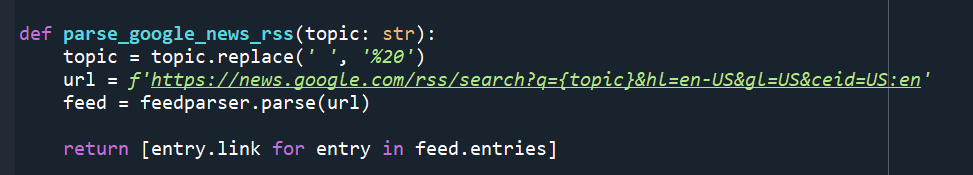

Another problem that came up in this project, and one that I did at least immediately realize I didn’t need to solve, was when I was scraping news articles. This was an extremely fast part: I used Google News and the Python requests library and pulled some articles. But when some of them didn’t pull (I was getting error messages), I immediately wrote my code to just skip any that failed. This isn’t a project where I try to scrape every possible news website; it’s a project where I need some unstructured text data to work with, and it doesn’t really matter which.
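The skip-it-and-move-on approach looks roughly like this. It is a sketch rather than my exact code: the function names, the timeout value, and the example URLs are assumptions, and the fetcher is passed in as a parameter only so the skipping logic can be tried without touching the network.

```python
from typing import Callable, List


def fetch_with_requests(url: str) -> str:
    """Download one page with the requests library (third-party)."""
    import requests  # imported here so the skip logic runs without it installed

    response = requests.get(url, timeout=10)
    response.raise_for_status()  # treat 4xx/5xx responses as failures
    return response.text


def pull_articles(
    urls: List[str], fetch: Callable[[str], str] = fetch_with_requests
) -> List[str]:
    """Return the text of every URL that downloads; skip the rest."""
    articles = []
    for url in urls:
        try:
            articles.append(fetch(url))
        except Exception as error:
            # Deliberately broad: any failure just means "skip this one".
            print(f"Skipping {url}: {error}")
    return articles


def fake_fetch(url: str) -> str:
    """Stand-in fetcher for trying the logic offline (hypothetical URLs)."""
    if "broken" in url:
        raise RuntimeError("simulated download error")
    return f"article text from {url}"


texts = pull_articles(
    ["https://example.com/one", "https://example.com/broken"], fetch=fake_fetch
)
print(len(texts))  # 1
```

Catching every exception would be too blunt for a scraper that needs complete coverage, but here a failed download costs nothing.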

Conclusion

Technical skills – being able to code the thing you need to code – are a big part of skill and effectiveness as a coder. But you’ll never be able to code everything, and certainly not efficiently, so ruthlessly triaging what is important and might not work, what you definitely don’t need to solve, and everything else, is also a useful skill that can make you a more productive (and happier!) coder.

And also, if you are fighting with Python versions or dependencies or a host of other issues, and you're using Windows, you should probably use Linux instead.

*Yes, I was using a virtual environment.

The big concept in this is the core difference between experts and non-experts in any given field. Experts aren't faster at doing things, they just spend less time on paths that won't work. They also recognize when something isn't going to work sooner / have better heuristics for knowing they're on the right track.

Sometimes I think the best path to developing expertise is just being allowed to explore the paths that don't work deep enough that you actually hit the point of failure. It sticks better than someone saying "go this way because that way won't work".